Jash Naik

Neural Network Security: Defending AI Systems Against Adversarial Attacks

Neural networks have become the backbone of modern AI applications, from autonomous vehicles to medical diagnosis systems. However, these powerful models are vulnerable to a wide range of sophisticated attacks that can compromise their integrity, availability, and confidentiality. This comprehensive guide explores the current threat landscape and practical defense strategies for securing AI systems.

Executive Summary

- Neural networks face unique security challenges beyond traditional software

- Adversarial attacks can cause misclassification with minimal input perturbations

- Model poisoning and data manipulation pose significant threats during training

- Defense requires multi-layered approach: robust training, detection, and monitoring

- Emerging threats include model stealing, membership inference, and backdoor attacks

Understanding the Threat Landscape

Adversarial Examples

Adversarial examples are carefully crafted inputs designed to fool neural networks into making incorrect predictions. These attacks exploit the high-dimensional nature of neural network input spaces and can be nearly imperceptible to humans.

Real-world Impact:

- Autonomous Vehicles: Slightly modified stop signs can be misclassified as speed limit signs

- Face Recognition: Adversarial patches can cause person misidentification

- Medical AI: Manipulated medical images can lead to misdiagnosis

Model Poisoning Attacks

These attacks corrupt the training process by injecting malicious data into training datasets, causing models to learn incorrect patterns.

- Data poisoning: Injecting mislabeled samples into training data

- Backdoor attacks: Creating hidden triggers that activate malicious behavior

- Model replacement: Substituting legitimate models with compromised versions

- Gradient-based attacks: Manipulating model updates in federated learning

Privacy Attacks

Machine learning models can inadvertently reveal sensitive information about their training data or model architecture.

Key Attack Vectors:

- Membership Inference: Determining if specific data was used in training

- Model Inversion: Reconstructing training data from model parameters

- Model Extraction: Stealing model functionality through query-based attacks

- Property Inference: Learning aggregate properties of training datasets

Defensive Strategies and Implementation

1. Adversarial Training

The most effective defense against adversarial examples is training models on adversarially perturbed data.

import torch

import torch.nn.functional as F

from torch.autograd import Variable

class AdversarialTrainer:

def __init__(self, model, optimizer, epsilon=0.3, alpha=0.01, iterations=10):

self.model = model

self.optimizer = optimizer

self.epsilon = epsilon # Maximum perturbation bound

self.alpha = alpha # Step size for PGD attack

self.iterations = iterations

def pgd_attack(self, images, labels):

"""Generate adversarial examples using Projected Gradient Descent"""

images = Variable(images.data, requires_grad=True)

# Random initialization within epsilon ball

perturbed = images + torch.empty_like(images).uniform_(-self.epsilon, self.epsilon)

perturbed = torch.clamp(perturbed, 0, 1)

for i in range(self.iterations):

perturbed.requires_grad_()

outputs = self.model(perturbed)

loss = F.cross_entropy(outputs, labels)

# Compute gradients

grad = torch.autograd.grad(loss, perturbed,

retain_graph=False, create_graph=False)[0]

# Update perturbation

perturbed = perturbed.data + self.alpha * grad.sign()

perturbed = torch.max(torch.min(perturbed, images + self.epsilon),

images - self.epsilon)

perturbed = torch.clamp(perturbed, 0, 1)

return perturbed.detach()

def train_step(self, images, labels):

"""Training step with adversarial examples"""

# Generate adversarial examples

adv_images = self.pgd_attack(images, labels)

# Train on mix of clean and adversarial examples

mixed_images = torch.cat([images, adv_images], dim=0)

mixed_labels = torch.cat([labels, labels], dim=0)

outputs = self.model(mixed_images)

loss = F.cross_entropy(outputs, mixed_labels)

self.optimizer.zero_grad()

loss.backward()

self.optimizer.step()

return loss.item()2. Input Preprocessing and Detection

Implement robust input validation and anomaly detection to identify suspicious inputs.

import numpy as np

from sklearn.ensemble import IsolationForest

from scipy import stats

import torch.nn.functional as F

class AdversarialDetector:

def __init__(self, model, contamination=0.1):

self.model = model

self.isolation_forest = IsolationForest(contamination=contamination)

self.benign_statistics = {}

def extract_features(self, x):

"""Extract statistical features for anomaly detection"""

features = []

# Statistical moments

features.extend([np.mean(x), np.std(x), stats.skew(x.flatten()),

stats.kurtosis(x.flatten())])

# Frequency domain features

fft = np.fft.fft2(x)

features.extend([np.mean(np.abs(fft)), np.std(np.abs(fft))])

# Gradient-based features

grad_x = np.gradient(x, axis=1)

grad_y = np.gradient(x, axis=0)

features.extend([np.mean(np.abs(grad_x)), np.mean(np.abs(grad_y))])

return np.array(features)

def fit_detector(self, benign_samples):

"""Train detector on benign samples"""

features = np.array([self.extract_features(x) for x in benign_samples])

self.isolation_forest.fit(features)

# Store benign statistics for comparison

self.benign_statistics = {

'mean': np.mean(features, axis=0),

'std': np.std(features, axis=0)

}

def detect_adversarial(self, x, confidence_threshold=0.5):

"""Detect if input is adversarial"""

features = self.extract_features(x).reshape(1, -1)

# Isolation Forest detection

anomaly_score = self.isolation_forest.decision_function(features)[0]

# Statistical deviation check

z_scores = np.abs((features[0] - self.benign_statistics['mean']) /

self.benign_statistics['std'])

max_z_score = np.max(z_scores)

# Model confidence check

with torch.no_grad():

outputs = self.model(torch.tensor(x).unsqueeze(0).float())

confidence = torch.max(F.softmax(outputs, dim=1)).item()

# Combined detection logic

is_adversarial = (anomaly_score < -0.5 or

max_z_score > 3.0 or

confidence < confidence_threshold)

return {

'is_adversarial': is_adversarial,

'anomaly_score': anomaly_score,

'max_z_score': max_z_score,

'confidence': confidence

}3. Model Robustness Techniques

- Defensive Distillation: Train models with softened probability distributions

- Gradient Masking: Reduce gradient information available to attackers

- Input Transformations: Apply random transforms to break adversarial perturbations

- Ensemble Methods: Use multiple models to increase attack difficulty

- Certified Defenses: Provide mathematical guarantees against attacks

4. Secure Model Training Pipeline

import hashlib

import json

from datetime import datetime

import torch

from cryptography.fernet import Fernet

class SecureTrainingPipeline:

def __init__(self, model_name, encryption_key=None):

self.model_name = model_name

self.training_log = []

self.data_hash = None

# Initialize encryption for sensitive data

if encryption_key:

self.cipher = Fernet(encryption_key)

else:

self.cipher = Fernet(Fernet.generate_key())

def validate_training_data(self, dataset):

"""Validate integrity of training data"""

# Compute dataset hash for integrity checking

data_string = str(sorted([str(sample) for sample in dataset]))

self.data_hash = hashlib.sha256(data_string.encode()).hexdigest()

# Check for data anomalies

self._detect_data_anomalies(dataset)

self.log_event("Data validation completed", {"data_hash": self.data_hash})

return True

def _detect_data_anomalies(self, dataset):

"""Detect potential data poisoning"""

import numpy as np

from collections import Counter

# Convert dataset to analyzable format

if hasattr(dataset, '__len__') and len(dataset) > 0:

# Extract labels if dataset has them

try:

if hasattr(dataset[0], '__len__') and len(dataset[0]) > 1:

# Assume (data, label) pairs

labels = [item[1] if isinstance(item[1], (int, str)) else item[1].item()

for item in dataset if len(item) > 1]

data_samples = [item[0] for item in dataset]

else:

# Data only

data_samples = list(dataset)

labels = None

except:

data_samples = list(dataset)

labels = None

# 1. Label distribution analysis

if labels:

label_counts = Counter(labels)

total_samples = len(labels)

# Check for extremely imbalanced classes (potential poisoning indicator)

for label, count in label_counts.items():

ratio = count / total_samples

if ratio > 0.95 or ratio < 0.005: # Very imbalanced

self.log_event("Suspicious label distribution detected",

{"label": label, "ratio": ratio})

# Check for unexpected label values

if isinstance(labels[0], (int, float)):

expected_range = (0, max(10, max(labels)))

outlier_labels = [l for l in labels if l < expected_range[0] or l > expected_range[1]]

if outlier_labels:

self.log_event("Outlier labels detected", {"outliers": len(outlier_labels)})

# 2. Statistical checks for outliers in data

if data_samples and hasattr(data_samples[0], 'shape'):

# For tensor/array data

try:

# Flatten and analyze data distribution

flattened_data = []

for sample in data_samples[:1000]: # Sample for efficiency

if hasattr(sample, 'flatten'):

flattened_data.extend(sample.flatten().tolist())

elif hasattr(sample, 'numpy'):

flattened_data.extend(sample.numpy().flatten().tolist())

if flattened_data:

data_array = np.array(flattened_data)

# Check for extreme values

mean_val = np.mean(data_array)

std_val = np.std(data_array)

outliers = np.abs(data_array - mean_val) > 5 * std_val

outlier_ratio = np.sum(outliers) / len(data_array)

if outlier_ratio > 0.05: # More than 5% outliers

self.log_event("High outlier ratio in data",

{"outlier_ratio": outlier_ratio})

# Check for suspicious data ranges

if np.min(data_array) < -1000 or np.max(data_array) > 1000:

self.log_event("Suspicious data value ranges",

{"min": float(np.min(data_array)),

"max": float(np.max(data_array))})

except Exception as e:

self.log_event("Error in statistical analysis", {"error": str(e)})

# 3. Sample similarity analysis (simplified)

if len(data_samples) > 10:

try:

# Check for duplicate samples (potential poisoning)

if hasattr(data_samples[0], 'shape'):

# Convert to hashable format for duplicate detection

sample_hashes = []

for sample in data_samples[:1000]: # Sample for efficiency

if hasattr(sample, 'numpy'):

hash_val = hash(sample.numpy().tobytes())

elif hasattr(sample, 'tobytes'):

hash_val = hash(sample.tobytes())

else:

hash_val = hash(str(sample))

sample_hashes.append(hash_val)

unique_samples = len(set(sample_hashes))

duplicate_ratio = 1 - (unique_samples / len(sample_hashes))

if duplicate_ratio > 0.3: # More than 30% duplicates

self.log_event("High duplicate sample ratio",

{"duplicate_ratio": duplicate_ratio})

except Exception as e:

self.log_event("Error in similarity analysis", {"error": str(e)})

def secure_model_save(self, model, filepath, metadata=None):

"""Securely save model with integrity checks"""

# Create model checkpoint

checkpoint = {

'model_state_dict': model.state_dict(),

'model_name': self.model_name,

'training_log': self.training_log,

'data_hash': self.data_hash,

'timestamp': datetime.now().isoformat(),

'metadata': metadata or {}

}

# Compute model hash

model_hash = self._compute_model_hash(model)

checkpoint['model_hash'] = model_hash

# Encrypt sensitive information

encrypted_log = self.cipher.encrypt(json.dumps(self.training_log).encode())

checkpoint['encrypted_log'] = encrypted_log

# Save with integrity verification

torch.save(checkpoint, filepath)

self.log_event("Model saved securely", {"model_hash": model_hash})

def verify_model_integrity(self, filepath):

"""Verify loaded model hasn't been tampered with"""

checkpoint = torch.load(filepath)

# Verify hashes match

if checkpoint.get('data_hash') != self.data_hash:

raise ValueError("Training data hash mismatch - potential tampering")

# Verify model hash

model_hash = checkpoint.get('model_hash')

if not self._verify_model_hash(checkpoint['model_state_dict'], model_hash):

raise ValueError("Model hash mismatch - potential tampering")

return checkpoint

def _compute_model_hash(self, model):

"""Compute hash of model parameters"""

param_str = ""

for param in model.parameters():

param_str += str(param.data.cpu().numpy().tobytes())

return hashlib.sha256(param_str.encode()).hexdigest()

def _verify_model_hash(self, state_dict, expected_hash):

"""Verify model parameters match expected hash"""

# Reconstruct hash from state dict

param_str = ""

# Sort parameters by name for consistent hashing

sorted_params = sorted(state_dict.items())

for param_name, param_tensor in sorted_params:

# Convert tensor to bytes for hashing

if hasattr(param_tensor, 'cpu'):

param_bytes = param_tensor.cpu().numpy().tobytes()

elif hasattr(param_tensor, 'numpy'):

param_bytes = param_tensor.numpy().tobytes()

else:

param_bytes = str(param_tensor).encode()

param_str += str(param_bytes)

# Compute hash

computed_hash = hashlib.sha256(param_str.encode()).hexdigest()

# Compare with expected hash

if computed_hash != expected_hash:

self.log_event("Model hash verification failed", {

"expected": expected_hash,

"computed": computed_hash

})

return False

self.log_event("Model hash verification successful", {

"hash": computed_hash

})

return True

def log_event(self, event, metadata=None):

"""Log training events for audit trail"""

log_entry = {

'timestamp': datetime.now().isoformat(),

'event': event,

'metadata': metadata or {}

}

self.training_log.append(log_entry)Critical Implementation Challenges

Technical Challenges

- Performance Trade-offs: Robust models often sacrifice accuracy for security

- Scalability Issues: Defense mechanisms must work at production scale

- Evolving Threats: Attackers continuously develop new attack methods

- False Positive Management: Detection systems can flag legitimate inputs

- Resource Constraints: Defense mechanisms require additional computation

Operational Challenges

Model Lifecycle Security: Securing models throughout development, deployment, and maintenance phases requires comprehensive governance frameworks.

Threat Intelligence: Organizations need continuous monitoring of new attack vectors and defense techniques in the rapidly evolving ML security landscape.

Skills Gap: Limited availability of professionals with expertise in both machine learning and cybersecurity creates implementation challenges.

Real-World Case Studies and Lessons Learned

Case Study 1: Tesla Autopilot Adversarial Attack (2018)

Incident: Researchers demonstrated that small stickers placed on road signs could cause Tesla’s Autopilot to misinterpret speed limit signs.

Impact: Highlighted vulnerabilities in real-world deployment of neural networks for safety-critical applications.

Lessons Learned:

- Computer vision systems need robust validation against adversarial inputs

- Safety-critical applications require multiple verification layers

- Regular security testing should include adversarial robustness evaluation

Case Study 2: Microsoft Tay Chatbot (2016)

Incident: Microsoft’s AI chatbot learned inappropriate behavior from adversarial users who coordinated to feed it biased training data.

Impact: Demonstrated how real-time learning systems can be manipulated through data poisoning attacks.

Lessons Learned:

- Online learning systems need robust content filtering

- Human oversight is crucial for AI systems that learn from user interactions

- Rapid response mechanisms are needed to mitigate damage from successful attacks

Advanced Defense Strategies

Differential Privacy for Model Protection

import torch

import numpy as np

from opacus import PrivacyEngine

class DifferentiallyPrivateTrainer:

def __init__(self, model, optimizer, noise_multiplier=1.0, max_grad_norm=1.0):

self.model = model

self.optimizer = optimizer

self.privacy_engine = PrivacyEngine()

# Attach privacy engine to model and optimizer

self.model, self.optimizer, _ = self.privacy_engine.make_private(

module=model,

optimizer=optimizer,

data_loader=None, # Will be set during training

noise_multiplier=noise_multiplier,

max_grad_norm=max_grad_norm,

)

def train_with_privacy(self, train_loader, epochs, target_epsilon=1.0, target_delta=1e-5):

"""Train model with differential privacy guarantees"""

self.model.train()

for epoch in range(epochs):

for batch_idx, (data, target) in enumerate(train_loader):

self.optimizer.zero_grad()

output = self.model(data)

loss = torch.nn.functional.cross_entropy(output, target)

loss.backward()

self.optimizer.step()

# Check privacy budget

epsilon, best_alpha = self.privacy_engine.accountant.get_privacy_spent(

target_delta

)

if epsilon >= target_epsilon:

print(f"Privacy budget exhausted: ε={epsilon:.2f}")

return epoch, batch_idx

if batch_idx % 100 == 0:

print(f'Epoch {epoch}, Batch {batch_idx}, ε={epsilon:.2f}')

return epochs, len(train_loader)Federated Learning Security

import torch

import numpy as np

from typing import List, Dict

import hashlib

class SecureFederatedLearning:

def __init__(self, global_model, num_clients, byzantine_tolerance=0.3):

self.global_model = global_model

self.num_clients = num_clients

self.byzantine_tolerance = byzantine_tolerance

self.client_contributions = {}

def aggregate_with_byzantine_resilience(self, client_updates: List[Dict]):

"""Aggregate client updates with Byzantine fault tolerance"""

# Extract parameter updates

param_updates = []

for update in client_updates:

flattened = self._flatten_params(update['model_params'])

param_updates.append(flattened)

param_updates = np.array(param_updates)

# Apply Krum aggregation for Byzantine resilience

aggregated_params = self._krum_aggregation(param_updates)

# Update global model

self._update_global_model(aggregated_params)

return self.global_model.state_dict()

def _krum_aggregation(self, updates, f=None):

"""Krum aggregation rule for Byzantine robustness"""

if f is None:

f = int(self.byzantine_tolerance * len(updates))

n = len(updates)

scores = []

# Calculate Krum scores for each update

for i in range(n):

distances = []

for j in range(n):

if i != j:

dist = np.linalg.norm(updates[i] - updates[j])

distances.append(dist)

# Sum of distances to n-f-2 closest updates

distances.sort()

score = sum(distances[:n-f-2])

scores.append(score)

# Select update with minimum score

selected_idx = np.argmin(scores)

return updates[selected_idx]

def verify_client_integrity(self, client_id: str, update: Dict) -> bool:

"""Verify integrity of client update"""

# Check update hash

expected_hash = update.get('hash')

if not expected_hash:

return False

# Recompute hash

params_str = str(sorted(update['model_params'].items()))

computed_hash = hashlib.sha256(params_str.encode()).hexdigest()

if computed_hash != expected_hash:

print(f"Hash mismatch for client {client_id}")

return False

# Additional integrity checks

if self._detect_gradient_anomalies(update['model_params']):

print(f"Gradient anomalies detected for client {client_id}")

return False

return True

def _detect_gradient_anomalies(self, params: Dict) -> bool:

"""Detect anomalous gradients that may indicate attacks"""

# Check for unusually large parameter values

for param_name, param_value in params.items():

if torch.max(torch.abs(param_value)) > 10.0: # Threshold

return True

return False

def _flatten_params(self, params: Dict) -> np.ndarray:

"""Flatten model parameters into a single vector"""

flattened = []

for param in params.values():

flattened.extend(param.flatten().cpu().numpy())

return np.array(flattened)

def _update_global_model(self, aggregated_params: np.ndarray):

"""Update global model with aggregated parameters"""

# Reshape and update model parameters

param_idx = 0

with torch.no_grad():

for name, param in self.global_model.named_parameters():

# Calculate parameter size

param_size = param.numel()

# Extract corresponding parameters from aggregated array

param_data = aggregated_params[param_idx:param_idx + param_size]

# Reshape to match parameter shape

param_tensor = torch.tensor(param_data).reshape(param.shape)

# Update parameter

param.copy_(param_tensor)

param_idx += param_size

# Log the update

print(f"Global model updated with {param_idx} parameters")

# Optional: Validate parameter ranges

for name, param in self.global_model.named_parameters():

if torch.isnan(param).any() or torch.isinf(param).any():

print(f"Warning: Invalid values detected in parameter {name}")

# Could implement parameter clipping or other remediation hereIndustry Best Practices and Frameworks

1. ML Security Development Lifecycle

- Threat Modeling: Identify attack vectors specific to your ML application

- Secure Data Pipeline: Implement data validation and integrity checks

- Adversarial Testing: Regular evaluation against known attack methods

- Continuous Monitoring: Real-time detection of anomalous model behavior

- Incident Response: Prepared procedures for handling security breaches

2. Regulatory Compliance and Standards

NIST AI Risk Management Framework: Provides guidelines for managing AI-related risks including security vulnerabilities.

EU AI Act: Requires high-risk AI systems to implement robust security measures and undergo conformity assessments.

ISO/IEC 27090: International standard for AI security management systems.

3. Organizational Security Measures

import logging

from datetime import datetime

from typing import Dict, List, Any

import json

class MLSecurityFramework:

def __init__(self, application_name: str, risk_level: str = "high"):

self.application_name = application_name

self.risk_level = risk_level

self.security_controls = {}

self.audit_log = []

# Setup logging for security events

logging.basicConfig(

filename=f'{application_name}_security.log',

level=logging.INFO,

format='%(asctime)s - %(levelname)s - %(message)s'

)

self.logger = logging.getLogger(__name__)

def implement_control(self, control_id: str, control_type: str,

config: Dict[str, Any]):

"""Implement a security control"""

control = {

'id': control_id,

'type': control_type,

'config': config,

'implemented_date': datetime.now().isoformat(),

'status': 'active'

}

self.security_controls[control_id] = control

self.logger.info(f"Security control {control_id} implemented")

# Audit trail

self.audit_log.append({

'action': 'control_implemented',

'control_id': control_id,

'timestamp': datetime.now().isoformat()

})

def validate_input_security(self, input_data: Any, model_name: str) -> Dict:

"""Comprehensive input validation and threat detection"""

validation_results = {

'is_safe': True,

'threat_score': 0.0,

'detected_threats': [],

'validation_timestamp': datetime.now().isoformat()

}

# Size validation

if hasattr(input_data, 'shape'):

if any(dim > 10000 for dim in input_data.shape):

validation_results['detected_threats'].append('oversized_input')

validation_results['threat_score'] += 0.3

# Content validation (example for images)

if self._is_image_input(input_data):

threats = self._detect_image_threats(input_data)

validation_results['detected_threats'].extend(threats)

validation_results['threat_score'] += len(threats) * 0.2

# Statistical anomaly detection

if self._detect_statistical_anomalies(input_data):

validation_results['detected_threats'].append('statistical_anomaly')

validation_results['threat_score'] += 0.4

# Overall safety assessment

validation_results['is_safe'] = validation_results['threat_score'] < 0.5

# Log security events

if not validation_results['is_safe']:

self.logger.warning(f"Threat detected for model {model_name}: {validation_results}")

return validation_results

def _is_image_input(self, data: Any) -> bool:

"""Check if input data is an image"""

return hasattr(data, 'shape') and len(data.shape) in [3, 4]

def _detect_image_threats(self, image_data: Any) -> List[str]:

"""Detect image-specific threats"""

threats = []

try:

import numpy as np

# Convert to numpy if needed

if hasattr(image_data, 'cpu'):

img_array = image_data.cpu().numpy()

elif hasattr(image_data, 'numpy'):

img_array = image_data.numpy()

else:

img_array = np.array(image_data)

# 1. High-frequency noise detection (common in adversarial examples)

if len(img_array.shape) >= 2:

# Calculate high-frequency content using gradients

grad_x = np.gradient(img_array, axis=-1 if len(img_array.shape) >= 2 else 0)

grad_y = np.gradient(img_array, axis=-2 if len(img_array.shape) >= 2 else 0)

high_freq_energy = np.mean(np.abs(grad_x)) + np.mean(np.abs(grad_y))

# Threshold for detecting unusual high-frequency content

if high_freq_energy > 0.5: # Adjust based on data characteristics

threats.append('high_frequency_perturbation')

# 2. Statistical variance check

if hasattr(image_data, 'std'):

variance = float(image_data.std())

# Very high variance can indicate adversarial noise

if variance > 100: # Adjust threshold based on data range

threats.append('high_variance_perturbation')

# Very low variance might indicate synthetic/generated content

elif variance < 0.01:

threats.append('low_variance_synthetic')

# 3. Pixel value range analysis

if hasattr(image_data, 'min') and hasattr(image_data, 'max'):

min_val = float(image_data.min())

max_val = float(image_data.max())

# Check for values outside expected ranges

if min_val < -10 or max_val > 300: # Common image ranges

threats.append('unusual_pixel_range')

# Check for clipped values (common in adversarial attacks)

total_pixels = image_data.numel() if hasattr(image_data, 'numel') else np.prod(img_array.shape)

clipped_min = (image_data == min_val).sum() if hasattr(image_data, 'sum') else np.sum(img_array == min_val)

clipped_max = (image_data == max_val).sum() if hasattr(image_data, 'sum') else np.sum(img_array == max_val)

clipping_ratio = (float(clipped_min) + float(clipped_max)) / total_pixels

if clipping_ratio > 0.1: # More than 10% clipped pixels

threats.append('excessive_clipping')

# 4. Adversarial pattern detection using DCT

try:

from scipy.fftpack import dctn

if len(img_array.shape) >= 2:

# Apply 2D DCT to detect frequency domain anomalies

dct_coeffs = dctn(img_array.squeeze() if len(img_array.shape) > 2 else img_array)

# Check high-frequency coefficients

high_freq_coeffs = dct_coeffs[dct_coeffs.shape[0]//2:, dct_coeffs.shape[1]//2:]

high_freq_energy = np.mean(np.abs(high_freq_coeffs))

if high_freq_energy > 0.1: # Threshold for adversarial patterns

threats.append('frequency_domain_anomaly')

except ImportError:

# scipy not available, skip DCT analysis

pass

# 5. Spatial consistency check

if len(img_array.shape) >= 2:

# Check for sudden intensity changes (potential adversarial patches)

diff_h = np.abs(np.diff(img_array, axis=-2))

diff_w = np.abs(np.diff(img_array, axis=-1))

max_diff_h = np.max(diff_h)

max_diff_w = np.max(diff_w)

if max_diff_h > 200 or max_diff_w > 200: # Large intensity jumps

threats.append('spatial_discontinuity')

except Exception as e:

# If analysis fails, flag as potentially suspicious

threats.append('analysis_error')

print(f"Error in image threat detection: {e}")

return threats

def _detect_statistical_anomalies(self, data: Any) -> bool:

"""Detect statistical anomalies in input data"""

try:

# Multi-dimensional anomaly detection

if hasattr(data, 'shape') and hasattr(data, 'mean'):

# Statistical analysis for tensor data

mean_val = float(data.mean())

std_val = float(data.std())

min_val = float(data.min())

max_val = float(data.max())

# Check for extreme values

if abs(mean_val) > 1000 or std_val > 500:

return True

# Check for unusual value ranges

if min_val < -10 or max_val > 10: # Assuming normalized data

return True

# Check for NaN or infinite values

if hasattr(data, 'isnan') and hasattr(data, 'isinf'):

if data.isnan().any() or data.isinf().any():

return True

# Check data distribution (entropy-based)

if hasattr(data, 'flatten'):

flat_data = data.flatten()

if len(flat_data) > 10:

# Simple entropy calculation

import numpy as np

hist, _ = np.histogram(flat_data.cpu().numpy() if hasattr(flat_data, 'cpu') else flat_data, bins=50)

hist = hist + 1e-10 # Avoid log(0)

entropy = -np.sum(hist * np.log(hist))

# Very low entropy might indicate synthetic/adversarial data

if entropy < 1.0:

return True

# Scalar data analysis

elif isinstance(data, (int, float)):

if abs(data) > 1000 or data != data: # NaN check

return True

# String/text data analysis

elif isinstance(data, str):

# Check for suspicious patterns in text

if len(data) > 10000: # Extremely long strings

return True

if data.count('\x00') > 0: # Null bytes

return True

return False

except Exception as e:

# If we can't analyze the data, consider it suspicious

print(f"Error in anomaly detection: {e}")

return True

def generate_security_report(self) -> Dict:

"""Generate comprehensive security assessment report"""

report = {

'application_name': self.application_name,

'risk_level': self.risk_level,

'report_timestamp': datetime.now().isoformat(),

'active_controls': len([c for c in self.security_controls.values()

if c['status'] == 'active']),

'total_controls': len(self.security_controls),

'recent_threats': [log for log in self.audit_log[-10:]

if 'threat' in log.get('action', '')],

'recommendations': self._generate_recommendations()

}

return report

def _generate_recommendations(self) -> List[str]:

"""Generate security recommendations based on current state"""

recommendations = []

if len(self.security_controls) < 5:

recommendations.append("Implement additional security controls")

if self.risk_level == "high" and not any(c['type'] == 'adversarial_detection'

for c in self.security_controls.values()):

recommendations.append("Deploy adversarial detection system")

return recommendationsEmerging Threats and Future Considerations

Next-Generation Attack Vectors

- Multi-Modal Attacks: Exploiting interactions between different input modalities

- Supply Chain Poisoning: Compromising pre-trained models and datasets

- Prompt Injection: Manipulating large language models through crafted inputs

- Model Stealing: Extracting proprietary models through query-based attacks

- Quantum-Assisted Attacks: Leveraging quantum computing for cryptographic breaks

Defensive Technologies on the Horizon

Homomorphic Encryption for ML: Enables computation on encrypted data, protecting both training data and model parameters during processing.

Zero-Knowledge ML: Allows model verification without revealing training data or model internals.

Hardware-Based Security: Trusted execution environments (TEEs) and secure enclaves provide hardware-level protection for ML computations.

Automated Defense Generation: AI-powered systems that automatically generate and deploy defenses against new attack types.

Actionable Implementation Roadmap

Phase 1: Assessment and Planning (Weeks 1-4)

- Risk Assessment: Identify critical ML assets and potential attack vectors

- Baseline Security: Audit current security measures and identify gaps

- Threat Modeling: Document specific threats relevant to your application

- Team Training: Educate development teams on ML security principles

Phase 2: Core Defenses (Weeks 5-12)

- Input Validation: Implement robust input sanitization and anomaly detection

- Adversarial Training: Integrate adversarial examples into training pipeline

- Model Integrity: Deploy model verification and integrity checking

- Monitoring Systems: Set up continuous monitoring for anomalous behavior

Phase 3: Advanced Security (Weeks 13-24)

- Differential Privacy: Implement privacy-preserving training techniques

- Federated Security: Deploy secure aggregation for distributed training

- Incident Response: Establish procedures for handling security breaches

- Compliance: Ensure adherence to relevant regulations and standards

Comprehensive Implementation Example

Here’s how all the security components work together in a production environment:

import torch

import torch.nn as nn

import numpy as np

from typing import Dict, List, Any, Tuple

import logging

from datetime import datetime

class SecureNeuralNetworkSystem:

def __init__(self, model: nn.Module, config: Dict[str, Any]):

self.model = model

self.config = config

# Initialize security components

self.adversarial_trainer = AdversarialTrainer(

model,

torch.optim.Adam(model.parameters()),

epsilon=config.get('adversarial_epsilon', 0.1),

alpha=config.get('adversarial_alpha', 0.01),

iterations=config.get('adversarial_iterations', 10)

)

self.detector = AdversarialDetector(model, contamination=0.1)

self.training_pipeline = SecureTrainingPipeline(

model_name=config.get('model_name', 'secure_model'),

encryption_key=config.get('encryption_key')

)

self.security_framework = MLSecurityFramework(

application_name=config.get('app_name', 'neural_security'),

risk_level=config.get('risk_level', 'high')

)

# Setup logging

self._setup_security_logging()

# Training metrics

self.training_metrics = {

'total_epochs': 0,

'adversarial_examples_processed': 0,

'threats_detected': 0,

'security_incidents': 0

}

def _setup_security_logging(self):

"""Configure comprehensive security logging"""

self.security_logger = logging.getLogger('neural_security')

self.security_logger.setLevel(logging.INFO)

# Create file handler for security events

handler = logging.FileHandler('neural_security_events.log')

formatter = logging.Formatter(

'%(asctime)s - %(name)s - %(levelname)s - %(message)s'

)

handler.setFormatter(formatter)

self.security_logger.addHandler(handler)

def secure_training_epoch(self, train_loader, validation_loader=None) -> Dict[str, float]:

"""Execute one training epoch with full security measures"""

epoch_metrics = {

'training_loss': 0.0,

'adversarial_loss': 0.0,

'threats_detected': 0,

'samples_processed': 0

}

self.model.train()

total_loss = 0.0

for batch_idx, (data, targets) in enumerate(train_loader):

# 1. Input Security Validation

validation_result = self.security_framework.validate_input_security(

data, self.config.get('model_name', 'model')

)

if not validation_result['is_safe']:

epoch_metrics['threats_detected'] += 1

self.security_logger.warning(

f"Threat detected in training batch {batch_idx}: {validation_result['detected_threats']}"

)

continue # Skip this batch

# 2. Data Anomaly Detection

try:

anomaly_detected = self.detector.detect_adversarial(

data[0].cpu().numpy(), confidence_threshold=0.7

)

if anomaly_detected['is_adversarial']:

epoch_metrics['threats_detected'] += 1

self.security_logger.warning(

f"Adversarial input detected: {anomaly_detected}"

)

continue

except Exception as e:

self.security_logger.error(f"Error in anomaly detection: {e}")

# 3. Secure Adversarial Training

try:

# Standard training step

loss = self.adversarial_trainer.train_step(data, targets)

total_loss += loss

epoch_metrics['adversarial_loss'] += loss

epoch_metrics['samples_processed'] += len(data)

# Update metrics

self.training_metrics['adversarial_examples_processed'] += len(data)

except Exception as e:

self.security_logger.error(f"Error in training step: {e}")

epoch_metrics['threats_detected'] += 1

continue

# 4. Real-time Model Integrity Check

if batch_idx % 100 == 0: # Check every 100 batches

self._verify_model_integrity()

# Calculate average loss

epoch_metrics['training_loss'] = total_loss / len(train_loader)

# 5. Validation with Security Checks

if validation_loader:

val_metrics = self._secure_validation(validation_loader)

epoch_metrics.update(val_metrics)

# Update global metrics

self.training_metrics['total_epochs'] += 1

self.training_metrics['threats_detected'] += epoch_metrics['threats_detected']

# Log epoch completion

self.security_logger.info(f"Secure training epoch completed: {epoch_metrics}")

return epoch_metrics

def _secure_validation(self, validation_loader) -> Dict[str, float]:

"""Perform validation with security monitoring"""

self.model.eval()

val_metrics = {

'validation_accuracy': 0.0,

'validation_threats': 0,

'adversarial_robustness': 0.0

}

correct_predictions = 0

total_samples = 0

robust_predictions = 0

with torch.no_grad():

for data, targets in validation_loader:

# Security check on validation data

validation_result = self.security_framework.validate_input_security(

data, self.config.get('model_name', 'model')

)

if not validation_result['is_safe']:

val_metrics['validation_threats'] += 1

continue

# Standard accuracy

outputs = self.model(data)

predictions = torch.argmax(outputs, dim=1)

correct_predictions += (predictions == targets).sum().item()

total_samples += len(data)

# Test adversarial robustness on subset

if torch.rand(1).item() < 0.1: # Test 10% of validation data

try:

# Generate adversarial examples

adv_data = self.adversarial_trainer.pgd_attack(data, targets)

adv_outputs = self.model(adv_data)

adv_predictions = torch.argmax(adv_outputs, dim=1)

# Count robust predictions

robust_predictions += (adv_predictions == targets).sum().item()

except Exception as e:

self.security_logger.error(f"Error in robustness testing: {e}")

# Calculate metrics

if total_samples > 0:

val_metrics['validation_accuracy'] = correct_predictions / total_samples

if robust_predictions > 0:

val_metrics['adversarial_robustness'] = robust_predictions / (total_samples * 0.1)

return val_metrics

def _verify_model_integrity(self):

"""Verify model hasn't been tampered with during training"""

try:

# Compute current model hash

current_hash = self.training_pipeline._compute_model_hash(self.model)

# Log for integrity tracking

self.security_logger.info(f"Model integrity check: {current_hash[:16]}...")

# Check for unusual parameter values

for name, param in self.model.named_parameters():

if torch.isnan(param).any() or torch.isinf(param).any():

self.security_logger.error(f"Invalid parameter values detected in {name}")

self.training_metrics['security_incidents'] += 1

# Check parameter magnitudes

param_magnitude = torch.norm(param).item()

if param_magnitude > 1000: # Threshold for suspicious parameters

self.security_logger.warning(f"Large parameter magnitude in {name}: {param_magnitude}")

except Exception as e:

self.security_logger.error(f"Error in model integrity verification: {e}")

def secure_inference(self, input_data: torch.Tensor) -> Dict[str, Any]:

"""Perform secure inference with threat detection"""

# 1. Input validation and threat detection

validation_result = self.security_framework.validate_input_security(

input_data, self.config.get('model_name', 'model')

)

if not validation_result['is_safe']:

return {

'prediction': None,

'confidence': 0.0,

'security_alert': True,

'threats_detected': validation_result['detected_threats'],

'threat_score': validation_result['threat_score']

}

# 2. Adversarial detection

try:

anomaly_result = self.detector.detect_adversarial(

input_data[0].cpu().numpy(), confidence_threshold=0.5

)

if anomaly_result['is_adversarial']:

return {

'prediction': None,

'confidence': 0.0,

'security_alert': True,

'adversarial_detected': True,

'anomaly_details': anomaly_result

}

except Exception as e:

self.security_logger.error(f"Error in adversarial detection: {e}")

# 3. Secure model inference

self.model.eval()

with torch.no_grad():

outputs = self.model(input_data)

probabilities = torch.softmax(outputs, dim=1)

confidence, prediction = torch.max(probabilities, dim=1)

# 4. Confidence-based security check

conf_value = confidence.item()

if conf_value < 0.3: # Low confidence might indicate attack

self.security_logger.warning(f"Low confidence prediction: {conf_value}")

return {

'prediction': prediction.item(),

'confidence': conf_value,

'security_alert': False,

'probabilities': probabilities.tolist()

}

def generate_security_report(self) -> Dict[str, Any]:

"""Generate comprehensive security report"""

report = {

'system_status': 'operational',

'report_timestamp': datetime.now().isoformat(),

'training_metrics': self.training_metrics.copy(),

'security_framework_report': self.security_framework.generate_security_report(),

'model_integrity': 'verified',

'recommendations': []

}

# Add recommendations based on metrics

if self.training_metrics['threats_detected'] > 100:

report['recommendations'].append("High threat activity detected - review data sources")

if self.training_metrics['security_incidents'] > 0:

report['recommendations'].append("Security incidents detected - investigate model integrity")

# Security posture assessment

total_threats = self.training_metrics['threats_detected']

total_samples = self.training_metrics['adversarial_examples_processed']

if total_samples > 0:

threat_ratio = total_threats / total_samples

report['threat_ratio'] = threat_ratio

if threat_ratio > 0.05: # More than 5% threats

report['system_status'] = 'high_alert'

report['recommendations'].append("Implement additional security controls")

return report

# Example usage for production deployment

def deploy_secure_neural_network():

"""Example of complete secure neural network deployment"""

# Define model architecture

model = nn.Sequential(

nn.Linear(784, 128),

nn.ReLU(),

nn.Dropout(0.2),

nn.Linear(128, 64),

nn.ReLU(),

nn.Dropout(0.2),

nn.Linear(64, 10)

)

# Security configuration

config = {

'model_name': 'mnist_classifier',

'app_name': 'secure_mnist_system',

'risk_level': 'high',

'adversarial_epsilon': 0.1,

'adversarial_alpha': 0.01,

'adversarial_iterations': 10

}

# Initialize secure system

secure_system = SecureNeuralNetworkSystem(model, config)

# Training would happen here with secure_training_epoch()

# Inference would use secure_inference()

# Monitoring would use generate_security_report()

return secure_system

if __name__ == "__main__":

secure_system = deploy_secure_neural_network()

print("Secure Neural Network System initialized successfully")Conclusion

Neural network security represents one of the most critical challenges in modern AI deployment. As these systems become increasingly integrated into high-stakes applications, the potential impact of security vulnerabilities grows exponentially.

The key to effective neural network security lies in adopting a defense-in-depth approach that combines multiple complementary strategies:

- Proactive defenses like adversarial training and robust architectures

- Detective controls for identifying attacks in real-time

- Responsive measures for containing and mitigating damage

- Continuous improvement based on emerging threats and defensive techniques

Organizations must view ML security not as an afterthought, but as a fundamental requirement that should be integrated throughout the entire machine learning lifecycle. The cost of implementing comprehensive security measures is far outweighed by the potential consequences of successful attacks on critical AI systems.

As the field evolves, staying current with the latest research, participating in the security community, and maintaining a culture of security-first development will be essential for organizations deploying neural networks in production environments.

Essential Resources and References

Academic Research Papers

- Adversarial Examples in the Physical World - Foundational paper on adversarial attacks

- Towards Deep Learning Models Resistant to Adversarial Examples - Adversarial training methodology

- Differential Privacy for Deep Learning - Privacy-preserving ML techniques

- Byzantine-Robust Distributed Learning - Federated learning security

Industry Standards and Frameworks

- NIST AI Risk Management Framework - Comprehensive AI risk guidelines

- MITRE ATLAS - Knowledge base of ML-specific attack techniques

- ISO/IEC 23053:2022 - Framework for AI risk management

- ENISA AI Threat Landscape - EU cybersecurity perspective

Open Source Security Tools

- Adversarial Robustness Toolbox (ART) - Comprehensive defense library

- CleverHans - Adversarial example library

- Opacus - Differential privacy for PyTorch

- TensorFlow Privacy - Privacy-preserving ML tools

Professional Communities

- AI Security and Privacy - Academic conference on ML security

- OWASP Machine Learning Security - Industry security guidelines

- Partnership on AI - Cross-industry AI safety collaboration

About Neural Network Security

This guide represents current best practices in neural network security as of August 2025. The field is rapidly evolving, with new attack methods and defensive techniques emerging regularly. Organizations should establish processes for staying current with the latest research and adapting their security posture accordingly.

For questions about implementing these security measures in your specific environment, consider consulting with security professionals who specialize in machine learning applications. The complexity of ML security often requires expertise that spans both domains.

Disclaimer: The code examples provided are for educational purposes and should be thoroughly tested and adapted before use in production environments. Security implementations should always be reviewed by qualified security professionals.

You May Also Like

Building an Advanced HTTP Request Generator for BERT-based Attack Detection

A comprehensive guide to creating a sophisticated HTTP request generator with global domain support and using it to train BERT models for real-time attack detection

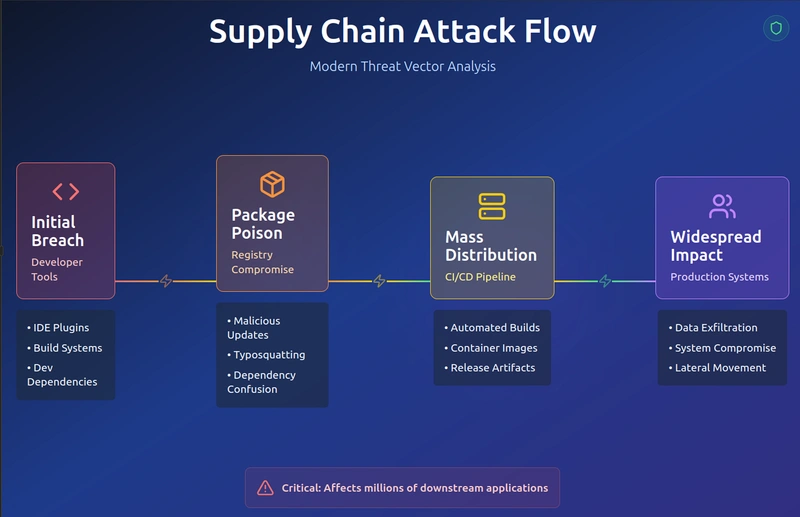

Software Supply Chain Security: Complete Defense Against Modern Attacks

Comprehensive guide to understanding, detecting, and preventing supply chain attacks across the entire software development lifecycle

AG-UI Protocol: Standardizing Event-Driven Communication Between AI and UI

AG-UI (Agent-User Interaction Protocol) is an open, lightweight protocol that standardizes how AI agents connect to front-end applications, creating a seamless bridge for real-time, event-driven communication between intelligent backends and user interfaces.