Jash Naik

AWS Strands Agents is an open-source SDK for building AI agents with a model-driven approach: developers supply a model, a prompt, and a set of tools, and a production-ready agent can take just a few lines of code. Released in May 2025, it departs from traditional agent frameworks by letting the language model handle planning and execution autonomously instead of following a hand-coded workflow.

- Open-source SDK for building AI agents

- Model-driven approach with minimal code

- Supports multiple model providers

- Built-in observability and deployment options

Why AWS Strands Agents Matter

Imagine building an AI assistant that can research topics, perform calculations, make API calls, and coordinate with other AI agents, all without writing complex workflow logic. This is the promise of AWS Strands Agents, an open-source SDK that grew out of AWS's internal production needs.

Released in May 2025, Strands Agents represents a fundamental shift from traditional agent frameworks. While most frameworks require developers to define rigid workflows and complex orchestration logic, Strands embraces the intelligence of modern language models to handle planning and execution autonomously. Multiple AWS teams, including Amazon Q Developer, AWS Glue, and VPC Reachability Analyzer, already rely on Strands for production workloads.

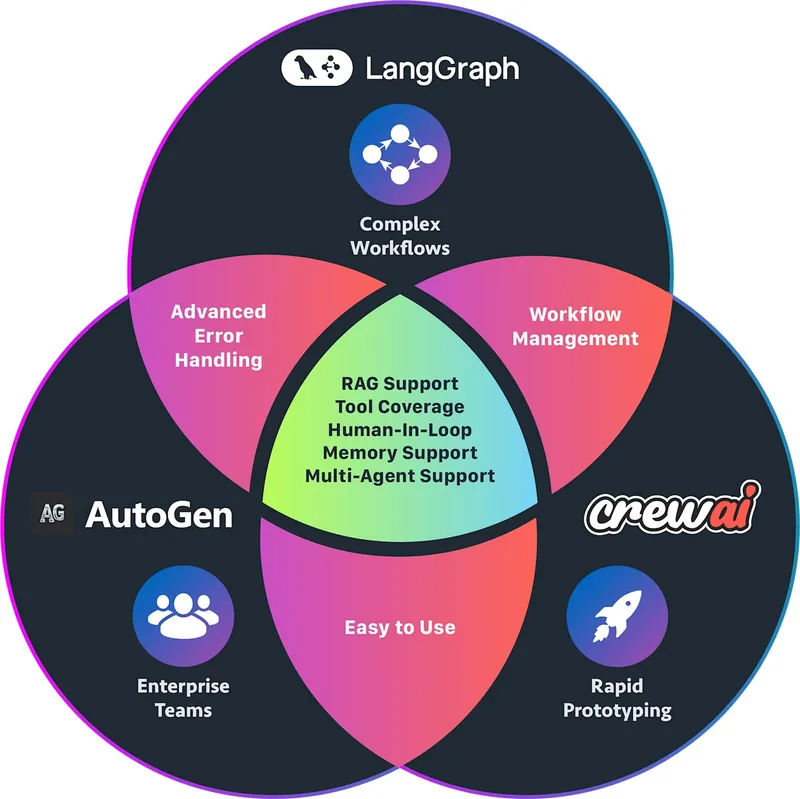

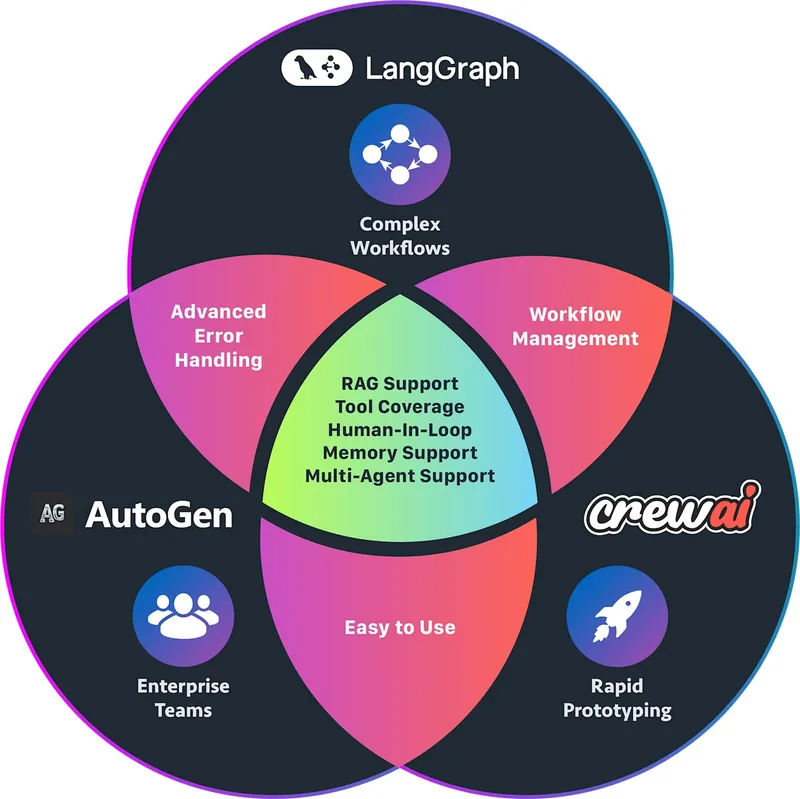

Comparison of LangGraph, AutoGen, and CrewAI agentic AI frameworks


The framework’s name reflects its core philosophy: like the two strands of DNA, Strands connects the two essential components of any AI agent—the model and the tools. This elegant simplicity makes agent development accessible while maintaining the power needed for complex enterprise applications.

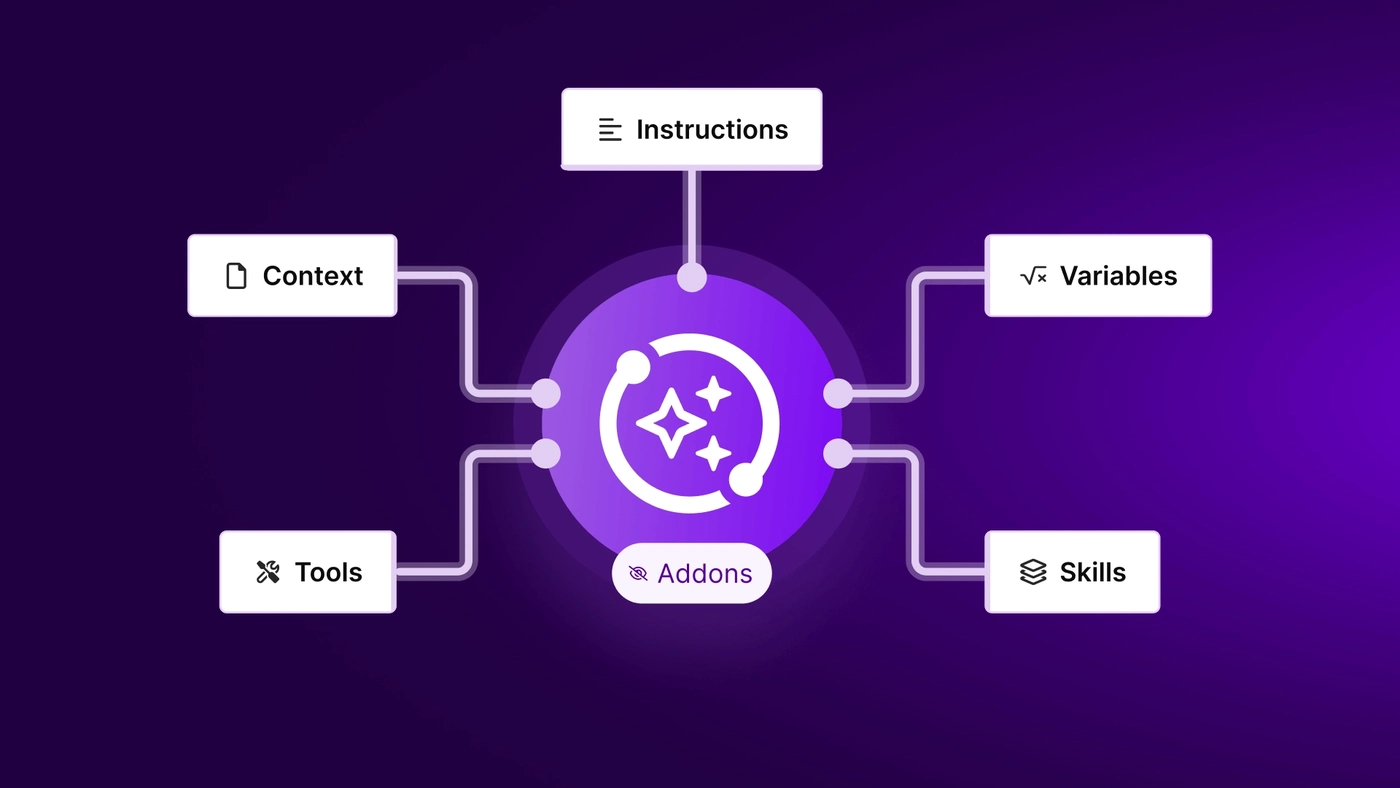

Understanding Core Concepts

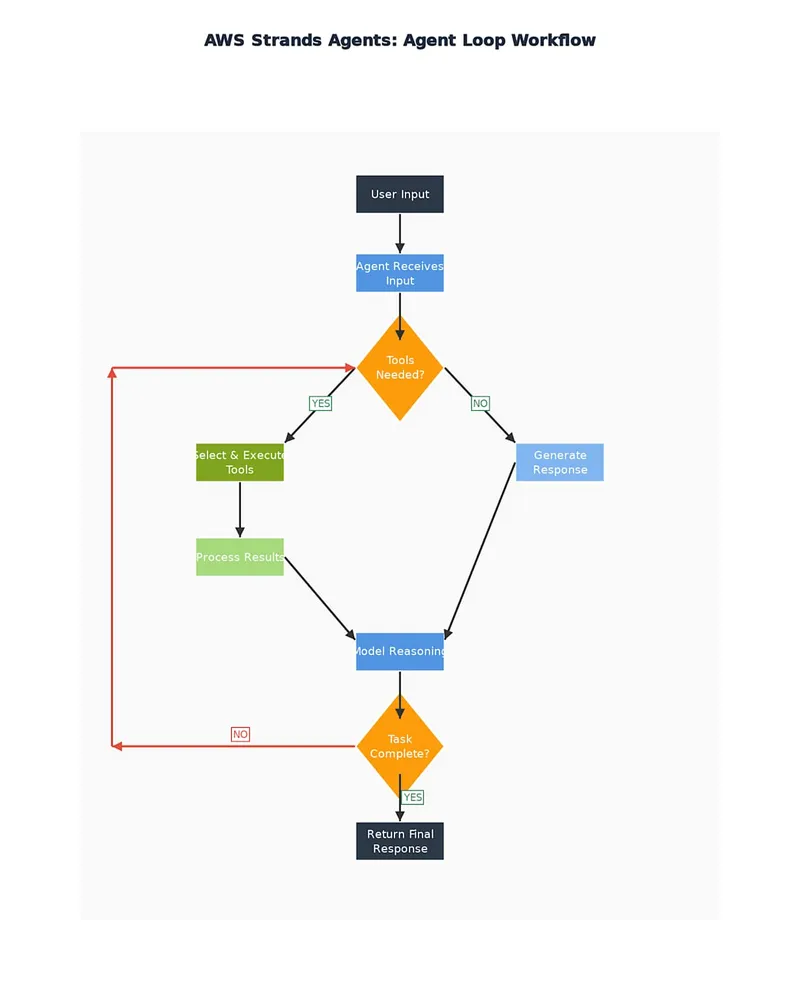

Agents & The Agent Loop

Think of a Strands agent as a thoughtful assistant who knows when to think, when to use tools, and when to respond. At its heart, an agent consists of three components: a model (the brain), tools (the hands), and a prompt (the instructions).

The agent loop operates like a continuous decision-making process. When you ask a question, the agent evaluates whether it can answer directly or needs additional information. If tools are required, the agent selects appropriate ones, processes the results, and continues reasoning until the task is complete.

AWS Strands Agents workflow showing the model-driven agent loop from user input to final response

This model-driven approach removes the need for the hardcoded workflows that traditional frameworks rely on. Instead of the developer encoding every possible path in advance, the agent uses the model's reasoning to decide its next step at run time.
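To make the loop concrete, here is a toy sketch in plain Python. It is not Strands' implementation: run_agent_loop, the message dictionaries, and scripted_model are hypothetical stand-ins, with scripted_model replaying the decisions a real model would make (first request a tool, then answer from its result).

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

def run_agent_loop(model: Callable[[List[Message]], dict],
                   tools: Dict[str, Callable[..., object]],
                   prompt: str,
                   max_turns: int = 5) -> str:
    """Toy agent loop: ask the model, run any requested tool, repeat until it answers."""
    messages: List[Message] = [{"role": "user", "text": prompt}]
    for _ in range(max_turns):
        decision = model(messages)
        if "tool" in decision:
            # The model asked for a tool: run it and feed the result back as context
            result = tools[decision["tool"]](**decision["input"])
            messages.append({"role": "tool", "name": decision["tool"], "text": str(result)})
            continue
        # No tool requested: the model's text is the final response
        return decision["text"]
    raise RuntimeError("Agent loop did not finish within max_turns")

def scripted_model(messages: List[Message]) -> dict:
    """Stand-in for an LLM: request the add tool once, then answer using its result."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "add", "input": {"a": 2, "b": 2}}
    return {"text": f"2 + 2 = {tool_results[-1]['text']}"}

answer = run_agent_loop(scripted_model, {"add": lambda a, b: a + b}, "What is 2 + 2?")
print(answer)  # 2 + 2 = 4
```

The max_turns guard is a design choice for the sketch: it stops a model that keeps requesting tools from looping forever.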

Sessions, State, and Context Management

Strands agents keep context across turns. Each Agent instance stores the running conversation in agent.messages, so later prompts can refer back to earlier exchanges and build on earlier work. A conversation manager, SlidingWindowConversationManager by default, trims that history when it grows too long for the model's context window, so this works without manual bookkeeping.
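The trimming idea can be sketched in a few lines of plain Python. This is an illustration under assumptions, not the SDK's code: trim_history is a hypothetical helper, and the message shape (a role plus a list of content blocks such as text, toolUse, or toolResult) is modeled on the Amazon Bedrock Converse format. The one subtlety it handles is not letting the window start on a tool result whose matching tool request was cut off.

```python
from typing import Any, Dict, List

Message = Dict[str, Any]

def trim_history(messages: List[Message], window_size: int) -> List[Message]:
    """Keep at most window_size recent messages (hypothetical helper)."""
    if len(messages) <= window_size:
        return list(messages)
    start = len(messages) - window_size
    # A toolResult without its earlier toolUse would be an invalid conversation,
    # so move the window start forward past any leading tool results.
    while start < len(messages) and any("toolResult" in block for block in messages[start]["content"]):
        start += 1
    return messages[start:]

history = [
    {"role": "user", "content": [{"text": "What's 15 * 12?"}]},
    {"role": "assistant", "content": [{"toolUse": {"name": "calculator", "input": {"expression": "15 * 12"}}}]},
    {"role": "user", "content": [{"toolResult": {"content": [{"text": "180"}]}}]},
    {"role": "assistant", "content": [{"text": "15 * 12 = 180."}]},
    {"role": "user", "content": [{"text": "And divided by 4?"}]},
]

print(len(trim_history(history, 3)))  # 2: the orphaned tool result is dropped too
```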

Tools Integration: The Agent’s Superpowers

Tools transform conversational agents into action-oriented assistants. Strands offers multiple integration approaches:

- Built-in Tools: 20+ pre-built tools for common operations

- Python Functions: Simple @tool decorator for custom functionality

- Model Context Protocol (MCP): Access to community-built tools
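The @tool decorator works by reading a function's signature and docstring to describe the tool to the model. The sketch below imitates that idea with a hypothetical tool_spec decorator built on Python's inspect module; the spec layout (name, description, inputSchema) is an assumption loosely modeled on JSON Schema, not the exact structure Strands generates.

```python
import inspect
from typing import Callable

# Map Python annotations to JSON Schema type names (unknown types fall back to "string")
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def tool_spec(func: Callable) -> Callable:
    """Hypothetical decorator: attach a JSON-Schema-style description of func."""
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults must be supplied
    func.spec = {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }
    return func

@tool_spec
def word_count(text: str, min_length: int = 1) -> int:
    """Count the words in text that have at least min_length characters."""
    return sum(1 for word in text.split() if len(word) >= min_length)

print(word_count.spec["inputSchema"]["required"])  # ['text']
```

The decorated function stays an ordinary callable, which is why a tool written this way can also be unit-tested directly without a model.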

Real-Time Streaming & Event Handling

The Strands Agents SDK provides two primary methods for streaming agent responses in real-time: Async Iterators and Callback Handlers. Both mechanisms allow you to intercept and process events as they happen during an agent’s execution, making them ideal for real-time monitoring, custom output formatting, and integrating with external systems.

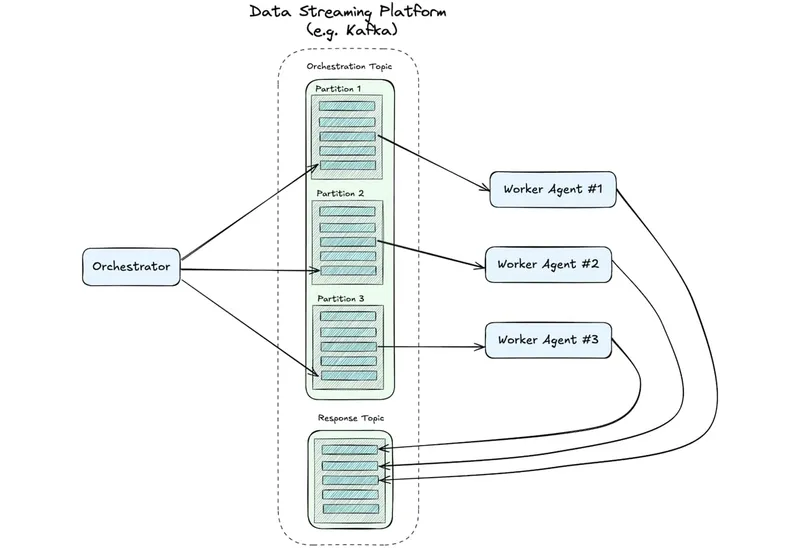

An event-driven multi-agent system using a data streaming platform

Async Iterators for Streaming

Async iterators are designed for real-time streaming in asynchronous environments, such as web servers and APIs built with FastAPI, aiohttp, or Django Channels. The SDK's stream_async method returns an asynchronous iterator that yields events as they occur.

```python
import asyncio

from strands import Agent
from strands_tools import calculator

# Initialize agent without a callback handler
agent = Agent(tools=[calculator], callback_handler=None)

# Async function that iterates over streamed agent events
async def process_streaming_response():
    agent_stream = agent.stream_async("Calculate 2+2")
    async for event in agent_stream:
        print(event)

# Run the agent
asyncio.run(process_streaming_response())
```

Callback Handlers for Streaming

Callback handlers provide another powerful approach for intercepting events during agent execution. You pass a callback function to your agent’s callback_handler parameter, and it receives events in real-time as keyword arguments.

```python
from strands import Agent
from strands_tools import calculator

def custom_callback_handler(**kwargs):
    # Process stream data
    if "data" in kwargs:
        print(f"MODEL OUTPUT: {kwargs['data']}")
    elif "current_tool_use" in kwargs and kwargs["current_tool_use"].get("name"):
        print(f"\nUSING TOOL: {kwargs['current_tool_use']['name']}")

# Create an agent with custom callback handler
agent = Agent(tools=[calculator], callback_handler=custom_callback_handler)

agent("Calculate 2+2")
```

Both methods provide access to the same event types:

- Text Generation Events: data (text chunk), complete (final chunk indicator), delta (raw delta content)

- Tool Events: current_tool_use (information about the tool being used)

- Lifecycle Events: init_event_loop, start_event_loop, start, message, event, force_stop

- Reasoning Events: reasoning, reasoningText, reasoning_signature
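Because a callback handler simply receives these events as keyword arguments, it can in principle be any callable, including an object that keeps state between calls. The sketch below (a hypothetical StreamCollector class, fed hand-written sample events rather than a live agent) reassembles the streamed text from data events and records each tool once. It deduplicates by the toolUseId key on current_tool_use, on the assumption that the same tool use is reported repeatedly while its input streams in.

```python
from typing import Any, List

class StreamCollector:
    """Hypothetical stateful callback handler: collects text chunks and tool names."""

    def __init__(self) -> None:
        self.chunks: List[str] = []
        self.tools_used: List[str] = []
        self._seen_tool_ids: set = set()

    def __call__(self, **kwargs: Any) -> None:
        if "data" in kwargs:
            self.chunks.append(kwargs["data"])
        tool_use = kwargs.get("current_tool_use") or {}
        tool_id = tool_use.get("toolUseId")
        # Record each tool use once, even if it is reported on several events
        if tool_id and tool_id not in self._seen_tool_ids:
            self._seen_tool_ids.add(tool_id)
            self.tools_used.append(tool_use.get("name", "unknown"))

    @property
    def text(self) -> str:
        return "".join(self.chunks)

# Simulated events in the shape described above
collector = StreamCollector()
sample_events = [
    {"current_tool_use": {"toolUseId": "t1", "name": "calculator", "input": '{"expr'}},
    {"current_tool_use": {"toolUseId": "t1", "name": "calculator", "input": '{"expression": "2+2"}'}},
    {"data": "2 + 2 "},
    {"data": "is 4."},
    {"complete": True},
]
for event in sample_events:
    collector(**event)

print(collector.text)        # 2 + 2 is 4.
print(collector.tools_used)  # ['calculator']
```

With a real agent, the instance would be passed as callback_handler=collector, assuming the parameter accepts any callable the way it accepts a plain function.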

Advanced Features & Multi-Agent Systems

Model Providers and Flexibility

Strands supports a range of model providers, so developers aren't locked into a single ecosystem. The default configuration uses Amazon Bedrock with Claude 3.7 Sonnet. Alternatives include the Anthropic API, Meta's Llama models, local models via Ollama, and OpenAI (among other providers) through LiteLLM.

Multi-Agent Collaboration at Scale

Many real-world tasks call for several specialized agents working together, and Strands supports several architectural patterns for coordinating them.

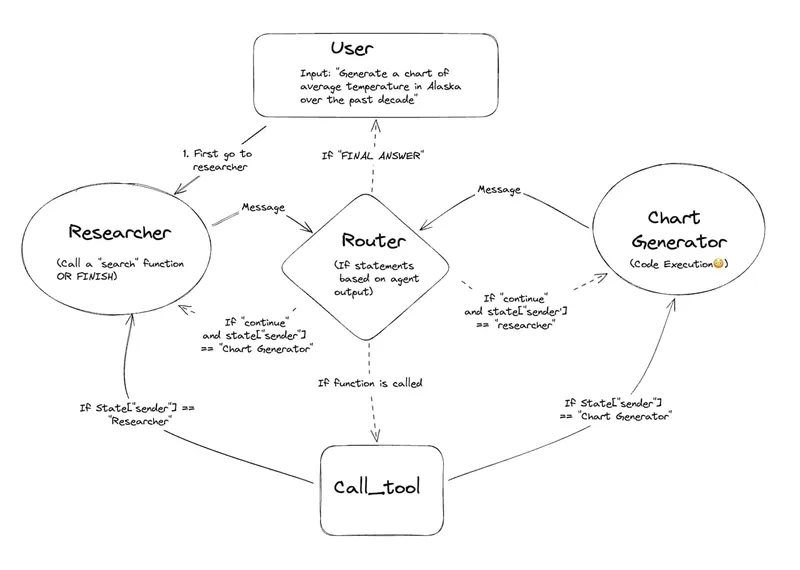

Diagram of a multi-agent workflow involving a user, researcher, router, chart generator, and call_tool function

In multi-agent networks, specialized agents collaborate as equals, each contributing unique expertise. Think of a research team where one agent gathers data, another analyzes trends, and a third generates visualizations. For structured workflows, Strands supports hierarchical arrangements where orchestrator agents delegate tasks to specialized workers.
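Hierarchical delegation can be pictured with plain functions standing in for agents. In Strands the orchestrator's model would decide which specialist to call (for example, by exposing each specialist agent as a tool); here a hypothetical keyword-based route function plays that role, so only the control flow is illustrated: the orchestrator picks a worker, hands it the task, and labels the result.

```python
from typing import Callable, Dict

Worker = Callable[[str], str]

def make_orchestrator(workers: Dict[str, Worker], route: Callable[[str], str]) -> Worker:
    """Build an orchestrator that delegates each task to one specialist worker."""
    def orchestrator(task: str) -> str:
        name = route(task)
        if name not in workers:
            return f"No worker available for: {task}"
        return f"[{name}] {workers[name](task)}"
    return orchestrator

# Stand-ins for specialized agents (hypothetical; real ones would call a model)
workers: Dict[str, Worker] = {
    "researcher": lambda task: f"collected sources on '{task}'",
    "analyst": lambda task: f"identified trends in '{task}'",
}

def keyword_route(task: str) -> str:
    """Stand-in for the orchestrator model's choice of specialist."""
    return "analyst" if "trend" in task.lower() else "researcher"

orchestrate = make_orchestrator(workers, keyword_route)
print(orchestrate("Find papers on solar storage"))
```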

Safety & Responsibility

As AI agents become more powerful, ensuring safe and responsible operation becomes critical. Strands incorporates multiple safety mechanisms designed for production environments:

- Input validation and output sanitization

- Integration with third-party guardrail services

- Responsible prompt engineering guidelines

- Content filtering and PII redaction through Amazon Bedrock Guardrails or third-party libraries
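Input sanitization can be as simple as scrubbing obvious identifiers before a prompt reaches the model and before a response is logged. The redact_pii helper below is a hypothetical, regex-based sketch covering only email addresses and North American-style phone numbers; it is not a Strands feature, and real PII detection needs much broader coverage.

```python
import re

# Simple patterns for two common identifier types
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"(?<!\w)(?:\+?1[-.\s]?)?\(?\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}(?!\w)")

def redact_pii(text: str) -> str:
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

print(redact_pii("Contact jane.doe@example.com or 555-123-4567."))
```

In an agent, the helper would wrap both directions, for example agent(redact_pii(prompt)) on the way in and redact_pii(str(result)) before logging the answer.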

Observability & Evaluation

Production AI systems need monitoring and evaluation. Strands is instrumented with OpenTelemetry (OTel), so traces of agent runs, model calls, and tool calls can be exported to any OTLP-compatible backend.

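```python
import os

from strands import Agent
from strands.telemetry import StrandsTelemetry

# Requires the OTel extra: pip install 'strands-agents[otel]'
# Point the OTLP exporter at a local collector (OTLP over HTTP uses port 4318)
os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = "http://localhost:4318"

# Set up tracing and export spans over OTLP
strands_telemetry = StrandsTelemetry()
strands_telemetry.setup_otlp_exporter()

# Custom attributes are attached to the traces this agent produces
agent = Agent(trace_attributes={"session.id": "demo-session", "user.id": "demo-user"})
```

Production Deployment & Implementation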

Deployment Options

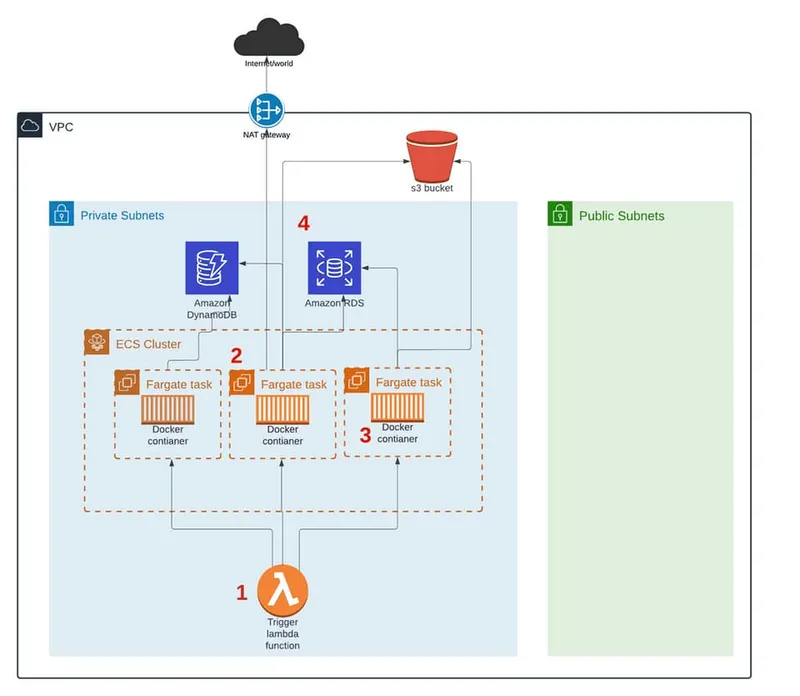

Strands agents transition smoothly from development to production across various AWS deployment options, each serving different use cases and scaling requirements.

AWS Fargate tasks triggered by a Lambda function in a serverless architecture

- AWS Lambda: Serverless simplicity for event-driven agents

- AWS Fargate: Containerized flexibility for interactive agents

- Amazon EC2: Maximum control for high-volume applications

Implementation Examples

Let’s explore practical examples that demonstrate how to build and customize AI agents using AWS Strands Agents SDK.

Basic Agent Setup

```python
from strands import Agent
from strands.models import BedrockModel

# Initialize the model (Claude 3.7 Sonnet via a Bedrock cross-region inference profile)
model = BedrockModel(model_id="us.anthropic.claude-3-7-sonnet-20250219-v1:0")

# Create the agent with a system prompt
agent = Agent(
    model=model,
    system_prompt="You are a helpful assistant. Answer clearly and concisely.",
    # Disable the default handler, which streams output to stdout;
    # the final response is printed explicitly below
    callback_handler=None,
)

# Interactive session
print("Type 'exit' to quit.")
while True:
    user_input = input("You: ")
    if user_input.lower() == "exit":
        break
    response = agent(user_input)
    print("Agent:", response)
```

Custom Tools Integration
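Both calculator tools in the examples that follow pass model-generated text straight to Python's eval(), which will execute any Python expression, not just arithmetic. A safer sketch, assuming only basic arithmetic is needed, walks the parsed expression tree with the standard ast module and rejects anything else; safe_eval is a hypothetical helper, not part of Strands or strands_tools.

```python
import ast
import operator

# Whitelisted arithmetic operators (** is left out to avoid huge-exponent abuse)
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Mod: operator.mod,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}

def safe_eval(expression: str) -> float:
    """Evaluate +, -, *, /, % and parentheses over numeric literals only."""
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        # Names, calls, attributes, etc. are rejected outright
        raise ValueError(f"Unsupported expression element: {type(node).__name__}")
    return _eval(ast.parse(expression, mode="eval").body)

print(safe_eval("15 * 12"))  # 180
```

Inside the @tool function, return str(safe_eval(expression)) would take the place of the eval call, and the existing except clause would still turn a rejected expression into an error message for the model.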

```python
from strands import Agent, tool
from strands.models import BedrockModel
from strands_tools import http_request

@tool
def calculator(expression: str) -> str:
    """Evaluates a mathematical expression."""
    try:
        # Caution: eval() runs arbitrary Python; restrict it before exposing it to untrusted input
        return str(eval(expression))
    except Exception as e:
        return f"Error: {e}"

# Create agent with multiple tools
agent = Agent(
    model=BedrockModel(model_id="us.anthropic.claude-3-7-sonnet-20250219-v1:0"),
    system_prompt="You are a math and weather assistant. Use tools appropriately.",
    tools=[calculator, http_request],
    callback_handler=None,  # print the final responses below instead of streaming them
)

# Example queries
print(agent("What's 15 * 12?"))  # Uses calculator
print(agent("What's the weather in New York?"))  # Uses http_request
```

Advanced Context Management

```python
from strands import Agent
from strands.agent.conversation_manager import SlidingWindowConversationManager
from strands.models import BedrockModel

# Custom window size (keep the last 10 messages)
conversation_manager = SlidingWindowConversationManager(window_size=10)

agent = Agent(
    model=BedrockModel(model_id="us.anthropic.claude-3-7-sonnet-20250219-v1:0"),
    conversation_manager=conversation_manager,
    system_prompt="You are a context-aware assistant.",
    callback_handler=None,
)

# The agent keeps context within the window
print(agent("What's the capital of France?"))
print(agent("What's its population?"))  # The agent remembers we're talking about Paris
```

Multi-Agent Collaboration

```python
from strands import Agent
from strands.models import BedrockModel
from strands_tools import http_request

MODEL_ID = "us.anthropic.claude-3-7-sonnet-20250219-v1:0"

# Research agent with web access
research_agent = Agent(
    model=BedrockModel(model_id=MODEL_ID),
    system_prompt="You are a research assistant. Use http_request for web queries.",
    tools=[http_request],
    callback_handler=None,
)

# Analysis agent
analysis_agent = Agent(
    model=BedrockModel(model_id=MODEL_ID),
    system_prompt="You analyze research findings and summarize them.",
    callback_handler=None,
)

# Workflow: research first, then analysis of the research output
query = "Summarize the latest news on renewable energy."
research_result = research_agent(query)
summary = analysis_agent(f"Summarize this: {research_result}")
print(summary)
```

Production Deployment

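```python
from strands import Agent
from strands.models import BedrockModel
from strands_tools import http_request

# Created once per execution environment and reused across warm invocations
model = BedrockModel(model_id="amazon.nova-micro-v1:0")

SYSTEM_PROMPT = "You are a weather assistant. Use the http_request tool to fetch live weather data."

def lambda_handler(event, context):
    # A fresh agent per invocation keeps one request's conversation history
    # from leaking into the next request on a warm Lambda instance
    agent = Agent(
        model=model,
        system_prompt=SYSTEM_PROMPT,
        tools=[http_request],
        callback_handler=None,
    )
    user_query = event.get("query", "")
    # AgentResult is not JSON-serializable, so return its text
    return {"response": str(agent(user_query))}
```

Complete Full-Featured Agent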

```python
from strands import Agent, tool
from strands.agent.conversation_manager import SlidingWindowConversationManager
from strands.models import BedrockModel
from strands_tools import http_request

@tool
def calculator(expression: str) -> str:
    """Evaluates a mathematical expression."""
    try:
        # Caution: eval() runs arbitrary Python; restrict it before exposing it to untrusted input
        return str(eval(expression))
    except Exception as e:
        return f"Error: {e}"

# Custom context window (last 20 messages)
conversation_manager = SlidingWindowConversationManager(window_size=20)

agent = Agent(
    model=BedrockModel(model_id="us.anthropic.claude-3-7-sonnet-20250219-v1:0"),
    system_prompt="You are an assistant who can calculate and fetch data from the web.",
    tools=[calculator, http_request],
    conversation_manager=conversation_manager,
    callback_handler=None,  # responses are printed explicitly in the loop
)

print("Start chatting! (type 'exit' to quit)")
while True:
    user_input = input("You: ")
    if user_input.lower() == "exit":
        break
    response = agent(user_input)
    print("Agent:", response)
```

- Automatic session and context management

- Easy tool integration with @tool decorator

- Custom conversation management for advanced use cases

- Multi-agent workflows for complex tasks

- Production-ready deployment options

Conclusion

AWS Strands Agents takes a model-first approach to agent development that favors simplicity without giving up capability. By relying on the reasoning of modern language models, Strands removes much of the hand-written orchestration logic that has historically made agent development difficult.

The framework's AWS-native design, tool ecosystem, and production-oriented features make it a strong option for developers building real-world AI applications, from simple assistants to complex multi-agent systems.

